Transfer Function

The connection between a sensor's input and output, or stimulus and response, is represented by the transfer function.

Definition and Importance

A sensor's transfer function is a mathematical representation that shows the relationship between the sensor's measured quantity (generally a physical characteristic like temperature, pressure, or light intensity) and its output, which is normally voltage or current. The behavior of a sensor can be better described by the transfer function.

Factors Affecting the Transfer Function

A number of factors impact a sensor's transfer function, which in turn influences how changes in the measured input correspond to changes in the sensor's output. The kind of sensor and how it is designed determine which parameters are relevant for that specific sensor. Sensitivity, linearity, hysteresis, temperature dependency, frequency response, noise and drift, saturation, resolution, mechanical variables, age, and wear over time are common characteristics that might impact a sensor's transfer function. This chapter will go into more depth about a few of these variables.

Sensitivity

A crucial parameter in the field of sensor technology, sensitivity indicates how much a sensor's output or reaction changes in response to a change in the quantity being measured. If a sensor is represented mathematically as a function, then sensitivity would be the function's derivative with regard to the input. Sensitivity, for instance, is defined as the change in electrical resistance (output) per degree Celsius change in temperature (input) in an electrical temperature sensor.
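To make the idea of sensitivity as a derivative concrete, here is a minimal sketch. It assumes a hypothetical resistive temperature sensor with a quadratic transfer function R(T) = R0(1 + aT + bT²). The function names and the default coefficients are assumptions chosen for illustration (they are close to values commonly quoted for a platinum Pt100 element), not data for any specific device.

```python
def resistance(temp_c, r0=100.0, a=3.9083e-3, b=-5.775e-7):
    """Hypothetical transfer function: resistance in ohms at temp_c (deg C)."""
    return r0 * (1.0 + a * temp_c + b * temp_c ** 2)


def sensitivity(transfer, x, dx=1e-3):
    """Approximate d(output)/d(input) at x with a central difference."""
    return (transfer(x + dx) - transfer(x - dx)) / (2.0 * dx)


s_0 = sensitivity(resistance, 0.0)      # slope of R(T) at 0 deg C
s_100 = sensitivity(resistance, 100.0)  # slope of R(T) at 100 deg C
print(f"{s_0:.4f} ohm/degC at 0 degC, {s_100:.4f} ohm/degC at 100 degC")
```

Because this assumed transfer function is slightly curved, the sensitivity is not the same at both temperatures; for a perfectly linear sensor the two values would match.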

Definition and Importance

Sensitivity is one of the essential properties of a sensor: it determines how small a variation in the measurand (the stimulus or quantity that is meant to be measured) the sensor can register. High-sensitivity sensors are valuable where accuracy and detail are crucial, since they can detect minute deviations. In medical imaging systems, for example, a difference of a fraction of a degree can change a diagnosis. In a home thermostat, on the other hand, where only large changes matter, a low-sensitivity sensor may be sufficient.

Factors Affecting Sensitivity and Its Optimization

A sensor's sensitivity can be affected by several things:

Material Properties: The intrinsic properties of the material used to make the sensor have a significant impact. Certain materials, for example, show a more pronounced change in electrical resistance with temperature, which makes them well suited to highly sensitive temperature sensors.

Sensor Geometry: The sensor's shape and design have the power to enhance or reduce sensitivity. Compared to bulk materials, thin films may be more sensitive to surface phenomena.

External Interference: External factors such as environmental variables or electromagnetic interference can introduce noise, which masks the genuine signal and reduces the effective sensitivity.

System Calibration: Optimizing a sensor's sensitivity requires proper calibration to guarantee that the output precisely reflects changes in the input.

Amplification: The raw output of a sensor may be insufficient in some situations. One way to increase the effective sensitivity is to amplify this signal while making sure the noise isn't amplified too much.

Linearity and Nonlinearity: Linear sensors have constant sensitivity across their range, whereas the sensitivity of non-linear sensors varies with the input.

Optimizing sensitivity usually involves a trade-off. Although high sensitivity is often desirable, it can also make a sensor more vulnerable to noise and outside interference. Careful thought and design adjustments are therefore needed to reach the sensitivity best suited to the particular application.

Range

In sensor technology, range is the span of values of a physical quantity, from minimum to maximum, that a sensor can measure reliably. It defines the limits within which the sensor operates. A temperature sensor, for example, may have a range of -50°C to 150°C, meaning that it can measure and report temperatures within these limits.

Definition and Importance

Range is an essential parameter for every sensor because it sets the limits of its operation, so selecting a sensor with the right range matters in almost every application. A sensor that is driven beyond its range may give inaccurate readings and may also suffer irreparable damage or a shortened lifespan.

The importance of range is hard to overstate. In an industrial context, for example, accidentally using a pressure sensor intended for low pressures in a high-pressure environment could result in dangerous conditions or equipment breakdowns. Conversely, using a high-range sensor for delicate measurements may give poor resolution and insufficient sensitivity to small variations.

Factors Affecting Range and Its Optimization

There are multiple factors that affect a sensor's range:

Material Characteristics: An important factor is the intrinsic qualities of the material used to make the sensor. While some materials may be more sensitive, others may be stronger and more resilient to extremes, providing a wider range.

Design and Construction: The range of a sensor can be affected by its overall structure, thickness, and physical layout. For example, pressure sensors with thicker diaphragms may have a wider pressure range.

Calibration: The calibration of a sensor has a direct impact on its range. The sensor's dependability must be guaranteed across its designated range by the calibration procedures used.

Environmental Conditions: The effective range of a sensor may be impacted by outside variables like humidity or ambient temperature. In polar settings as opposed to desert ones, a sensor's behavior could vary.

Electrical Characteristics: The specifications and configurations of the electrical components can be used to establish the range of electronic sensors. A sensor's effective range may be reduced by electrical overload.

A careful balance must be struck between the requirements of the application and the intrinsic qualities of the sensor in order to maximize the range. It is critical to make sure the sensor's range corresponds with the applications for which it is designed, offering accurate readings while preserving the integrity and lifetime of the sensor.

Accuracy and Precision

In the field of measuring and sensing, concepts like accuracy and precision are crucial. Although these phrases are sometimes used synonymously, they have different meanings, and knowing the distinction is important when assessing or creating measuring systems.

Accuracy vs. Precision and their Importance

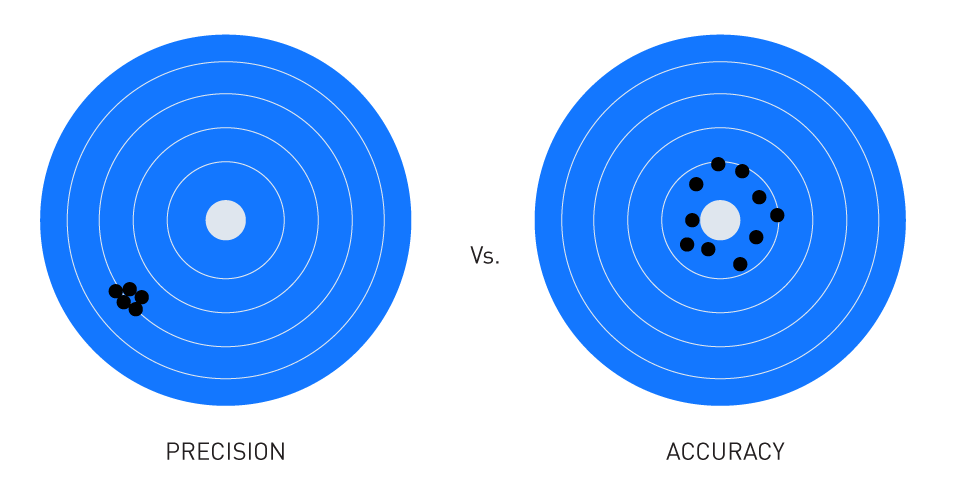

The degree to which a measured value resembles the actual or accepted reference value is referred to as accuracy. What matters is "hitting the target." Measurements from an accurate sensor are generally quite near to the true value.

Precision, by contrast, is associated with measurement repeatability. It describes the variation that appears when the same component or parameter is measured more than once. A precise sensor reliably produces the same reading each time, but that reading can still be far from the true value, so a precise sensor is not necessarily accurate.

The significance of these concepts is hard to overstate, particularly in engineering applications. Readings from a sensor that is accurate but not precise scatter widely, even though their average lies close to the true value. A highly precise yet inaccurate sensor, on the other hand, consistently produces readings that are off by a similar amount. Many applications require both precision and accuracy to guarantee correct and consistent measurements.

Figure 1: Precision vs. Accuracy
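The distinction between accuracy and precision can be made concrete with a few numbers. The sketch below is illustrative only: it treats the mean error against a known reference as a measure of accuracy and the sample standard deviation as a measure of precision. The function name and both sets of readings are made up for the example.

```python
from statistics import mean, stdev


def accuracy_and_precision(readings, true_value):
    """Return (bias, spread): mean error vs. the reference, and sample std deviation."""
    bias = mean(readings) - true_value
    spread = stdev(readings)
    return bias, spread


true_value = 100.0
precise_not_accurate = [104.9, 105.0, 105.1, 105.0, 105.0]  # tight cluster, off target
accurate_not_precise = [96.0, 104.0, 99.0, 102.0, 99.0]     # centered, widely scattered

bias_a, spread_a = accuracy_and_precision(precise_not_accurate, true_value)
bias_b, spread_b = accuracy_and_precision(accurate_not_precise, true_value)
print(f"precise, not accurate: bias={bias_a:.2f}, spread={spread_a:.2f}")
print(f"accurate, not precise: bias={bias_b:.2f}, spread={spread_b:.2f}")
```

A small bias with a large spread corresponds to an accurate but imprecise sensor; a large bias with a small spread corresponds to a precise but inaccurate one.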

Factors Affecting Accuracy and Precision

The following variables may affect a sensor's accuracy and precision:

Calibration: Sensors may deviate from their initial settings over time. They stay accurate with regular calibration.

Environmental Influences: Sensor readings might be impacted by variations in temperature, humidity, or other environmental factors.

Wear and Tear: In particular, mechanical sensors are susceptible to wear and tear over time, which can affect precision and accuracy.

Noise: Precision can be impacted by random fluctuations in sensor readings caused by electrical noise.

Quality of Components: Accuracy and precision can frequently be increased by using higher-quality, and frequently more expensive, components.

Systematic vs. Random Errors

Measurement errors are commonly classified into two types: systematic errors and random errors.

Systematic Errors: These errors are repeatable and consistent. They can be introduced by biases built into the system or by defective tools or methods. A scale with a systematic error, for example, might always read 5 grams too high. The benefit of systematic errors is that, once identified, they can be calibrated out or corrected.

Random Errors: These errors fluctuate randomly and are unpredictable. They may result from manual measurement methods, electrical noise, or erratic changes in the surroundings. Random errors can be reduced by taking additional measurements and averaging them, that is, by increasing the sample size.
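The different remedies for the two error types can be sketched with a small simulation. Everything here is hypothetical: a scale with a fixed 5 g offset (systematic error) and Gaussian noise with a 2 g standard deviation (random error). Averaging shrinks the random part, while the offset disappears only once it is subtracted as a calibration correction.

```python
import random
from statistics import mean


def read_scale(true_mass_g, offset_g=5.0, noise_g=2.0, rng=random):
    """Hypothetical scale: a fixed offset (systematic) plus Gaussian noise (random)."""
    return true_mass_g + offset_g + rng.gauss(0.0, noise_g)


rng = random.Random(42)  # fixed seed so the run is repeatable
true_mass_g = 200.0

single = read_scale(true_mass_g, rng=rng)
averaged = mean(read_scale(true_mass_g, rng=rng) for _ in range(10_000))
corrected = averaged - 5.0  # subtract the offset found during calibration

print(f"single reading:   {single:.2f} g")
print(f"mean of 10000:    {averaged:.2f} g (noise averaged out, offset remains)")
print(f"after correction: {corrected:.2f} g")
```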

In conclusion, it is critical to comprehend and maximize sensor accuracy and precision. It is imperative to differentiate between the many sorts of errors that impact measurements, as correcting them calls for different strategies.

Response Time

When assessing sensors' effectiveness and usefulness in a variety of applications, one of their most important qualities is their quickness in identifying and reacting to changes. This characteristic, referred to as "response time," can have a significant impact, particularly in situations when prompt responses are required.

Definition and Significance

Response time describes how long it takes a sensor to respond to a change in the parameter it is tracking and to generate an output in line with that change. In essence, it is the time interval between the application of an input stimulus (a change) and the moment the sensor's output settles at a value that reflects that change. A common criterion for many sensors is the time it takes the output, following a step change in the input, to reach a stated fraction of its final value, say 90%.
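As a rough illustration of the 90% criterion, the sketch below assumes the sensor behaves as a first-order system, whose normalized step response is 1 - exp(-t/τ). Both that model and the 2-second time constant are assumptions; real sensors may follow more complicated dynamics.

```python
import math


def step_response(t, tau):
    """Normalized output of a first-order sensor, t seconds after a unit step."""
    return 1.0 - math.exp(-t / tau)


def response_time(tau, fraction=0.9):
    """Time for the output to reach the given fraction of its final value."""
    return -tau * math.log(1.0 - fraction)


tau = 2.0  # assumed time constant in seconds
t90 = response_time(tau)
print(f"t90 = {t90:.2f} s")  # about 2.3 time constants
```

Under this model the 90% response time is always τ·ln(10), roughly 2.3 time constants, so halving the time constant halves the response time.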

There are several instances that demonstrate the importance of reaction time:

Safety: Fast sensor reaction times might mean the difference between averting an accident and experiencing a catastrophic failure in applications such as smoke detectors in buildings or anti-lock brake systems in cars.

Process Control: Early change detection can help industries—particularly those involved in chemical processes—maintain process equilibrium, maximize yields, and reduce waste.

Consumer Electronics: Quick-responding sensors significantly improve user experience in voice-activated or touchscreen systems.

Factors Affecting Response Time and Its Optimization

The reaction time of a sensor can be affected by several variables:

Sensor Design: Sensor response time can be influenced by the size, shape, and other geometrical features of the sensor. Thinner thermocouples, for example, detect temperature changes more quickly than thicker ones.

Material Properties: Characteristics of a sensor's response can be determined by the materials' intrinsic qualities. A material's response to alterations in its surroundings may vary depending on its composition.

External Environment: Sensor response can be altered by variables such as surrounding temperature, pressure, or humidity. For example, sensors in colder surroundings may respond more slowly than those in warmer climates.

Signal Processing: There may be delays caused by the electronics and algorithms needed to analyze and comprehend the raw data from a sensor. But as time has gone on, this has been less of a problem due to the development of quicker electronics and more effective algorithms.

Optimizing a sensor's response time usually involves a trade-off. A sensor that reacts very quickly may also pass more noise, resulting in less consistent readings. Based on application requirements, designers must balance the need for steady, noise-free readings against the importance of a quick response.

It is essential to comprehend and adjust the sensors' reaction times. Improved user experiences, safer surroundings, and more effective systems can all result from striking the correct balance.

Hysteresis

Hysteresis holds a special place among the many features that characterize a sensor's performance. This phenomenon can cause readings to become inconsistent, particularly when the input to a sensor fluctuates periodically.

Explanation and Causes

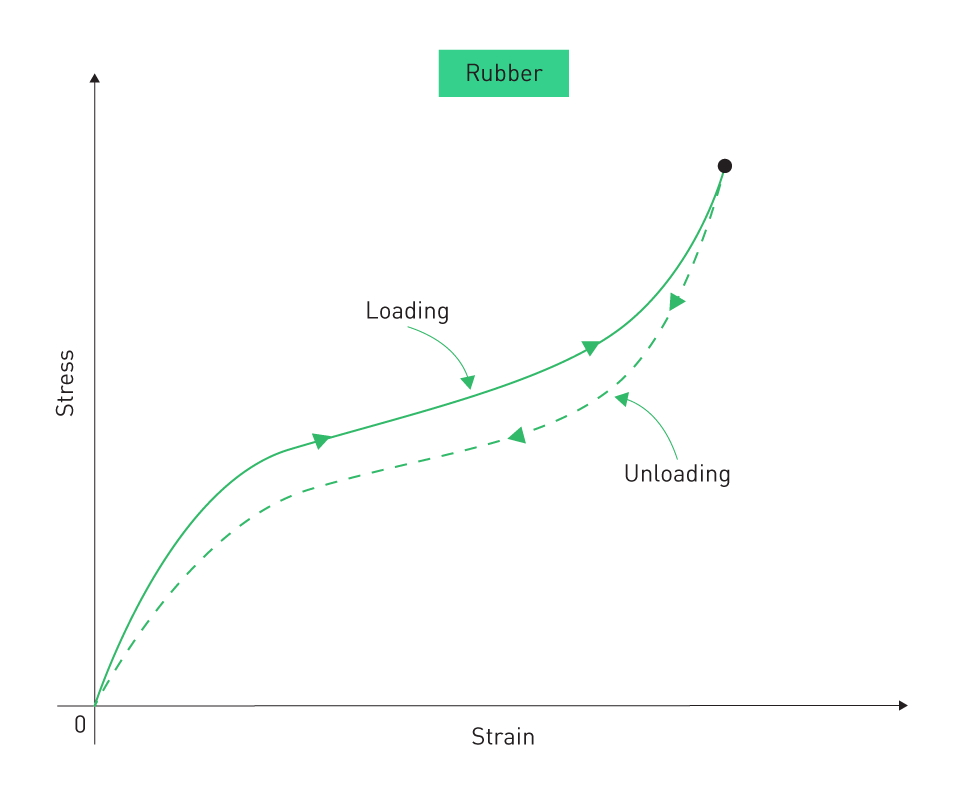

In the context of sensors, hysteresis is the difference in output at a given input level depending on whether that level is approached by increasing the input from a lower value or by decreasing it from a higher one. Consider the following situation: if you gradually increase the pressure on a pressure sensor from 0 to 100 units and then decrease it again, the reading at a given intermediate pressure on the way down may differ from the reading at the same pressure on the way up. Hysteresis is the term used to describe this difference or lag in response.

Figure 2: Hysteresis
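One common way to quantify hysteresis is to sweep the input up and then down, record the output at the same input points in both directions, and report the largest gap as a percentage of the full-scale output span. The sketch below follows that approach; the function name and the voltage readings are hypothetical.

```python
def max_hysteresis_percent_fs(rising, falling):
    """Largest gap between falling and rising outputs, as % of full-scale output span.

    rising and falling hold outputs recorded at the same input points.
    """
    span = max(rising + falling) - min(rising + falling)
    worst_gap = max(abs(down - up) for up, down in zip(rising, falling))
    return 100.0 * worst_gap / span


# Hypothetical pressure sensor outputs (volts) at inputs 0, 25, 50, 75, 100 units
rising = [0.00, 1.20, 2.45, 3.72, 5.00]   # recorded while increasing pressure
falling = [0.05, 1.32, 2.60, 3.80, 5.00]  # recorded while decreasing pressure

h = max_hysteresis_percent_fs(rising, falling)
print(f"max hysteresis = {h:.1f} % FS")
```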

A number of things can cause hysteresis in sensors:

Material Memory: Some materials for sensors, especially those with elastomeric and partially magnetic properties, have molecular configurations that cause them to "remember" their past states by nature. It is possible that they will not immediately return to their initial state when exposed to new environments.

Mechanical Friction or Stiction: Hysteresis can be brought on by internal friction in sensors that have moving components. Output readings lag as a result of this friction, which makes it difficult to react to changes quickly.

Thermal Effects: In particular, if the sensor isn't given enough time to establish thermal equilibrium, temperature variations might alter the material's properties and cause a lag or delay in response.

Hysteresis Impact on Sensor Performance and Its Mitigation

Hysteresis can impair the repeatability and dependability of sensor readings, especially where precise control is necessary and the sensor input fluctuates often.

Reducing the impact of hysteresis entails:

Material Selection: Selecting materials with low memory effects can lessen hysteresis. For example, hysteresis effects can be reduced in some pressure sensor types by employing non-elastomeric materials.

Thermal Compensation: Thermally-induced hysteresis can be reduced by incorporating temperature compensation devices or by guaranteeing that the sensor operates within a predetermined temperature range.

Calibration: Sensor calibration on a regular basis might assist in detecting and mitigating hysteresis effects. Certain sophisticated sensors have built-in algorithms for hysteresis correction.

Mechanical Design: In sensors with moving parts, hysteresis can be lessened by using lubricants that reduce stiction (the static friction that keeps stationary surfaces from moving).

To summarize, although hysteresis poses a difficulty in numerous sensor applications, its impact may be controlled and reduced via a thorough understanding of its sources and consequences, careful design, and material selection.

Resolution

Resolution emerges as a crucial feature as we look further into the qualities that determine the integrity and usability of sensors. In essence, it is the sensor's capacity to detect the smallest shift in the quantity being measured and react accordingly.

Definition and Importance

Resolution, to put it simply, is the smallest change in the measured quantity that a sensor can consistently identify and show. For example, a thermometer with a resolution of 0.1°C may detect temperature changes as little as 0.1°C.
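In digital systems, resolution is often limited by the analog-to-digital converter (ADC) that digitizes the sensor signal. The sketch below assumes an ideal ADC with a given number of bits spread evenly over the measurement span; the function names and the -50°C to 150°C range are illustrative assumptions.

```python
def adc_resolution(span, bits):
    """Smallest input step an ideal ADC with the given bit depth can distinguish."""
    return span / (2 ** bits)


def quantize(value, lower, span, bits):
    """Map a value to the lower edge of the ADC step that contains it."""
    step = adc_resolution(span, bits)
    code = int((value - lower) / step)
    code = max(0, min(code, 2 ** bits - 1))  # clamp to valid codes
    return lower + code * step


# Assumed range: -50 degC to 150 degC (200 degC span)
step_10 = adc_resolution(200.0, 10)  # about 0.195 degC per step
step_16 = adc_resolution(200.0, 16)  # about 0.003 degC per step

# A 0.1 degC change is invisible at 10 bits but visible at 16 bits
same_10 = quantize(20.0, -50.0, 200.0, 10) == quantize(20.1, -50.0, 200.0, 10)
same_16 = quantize(20.0, -50.0, 200.0, 16) == quantize(20.1, -50.0, 200.0, 16)
print(step_10, step_16, same_10, same_16)
```

In this example the 10-bit converter cannot deliver a 0.1°C resolution over the full span, while the 16-bit converter can, at least before noise is taken into account.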

Comprehending the resolution is crucial for several reasons:

Clarity of Information: More granular and precise data can be obtained with a better resolution, which presents a clearer picture of changes in the measured parameter.

Enhanced Control: Resolution is essential for maintaining optimal performance, particularly in control systems where even small changes can have big effects.

Distinguishing Noise from Data: In digital systems, inaccurate readings may result from low-resolution sensors' inability to distinguish between minute fluctuations (noise) and real data changes.

Resolution's Role in Determining System Performance

Despite being a standalone characteristic, a sensor's resolution is closely related to the system's overall performance:

Decision-Making: Sensor data is frequently the basis for decision-making in automated systems. High-resolution sensors can provide information that helps with more accurate and well-informed decision-making.

Data Interpretation: Accurate identification of elements or compounds can be achieved in analytical applications such as spectroscopy by using a better resolution to differentiate between closely lying spectral lines.

System Response: The resolution of the sensor can have a direct impact on how feedback-controlled systems react to changing circumstances. For example, a high-resolution temperature sensor can result in more stable and consistent temperature management in a temperature-controlled chamber.

Error Minimization: The resolution of a sensor in measurement systems can have a big impact on the total error budget. This possible source of mistake can be reduced by making sure a sensor has enough resolution for the intended use.

In summary, resolution determines how well a system can operate, respond, or make decisions based on the data supplied by the sensor in addition to being a measure of the sensor's capacity. In order to create smarter, more effective systems, high-resolution sensors are becoming increasingly important as technology develops.

Linearity and Nonlinearity

Understanding how a sensor behaves and responds to changing inputs requires an understanding of both linearity and its opposite, non-linearity. This characteristic affects several aspects of system operation, particularly calibration and signal interpretation.

Explanation of Sensor Linearity

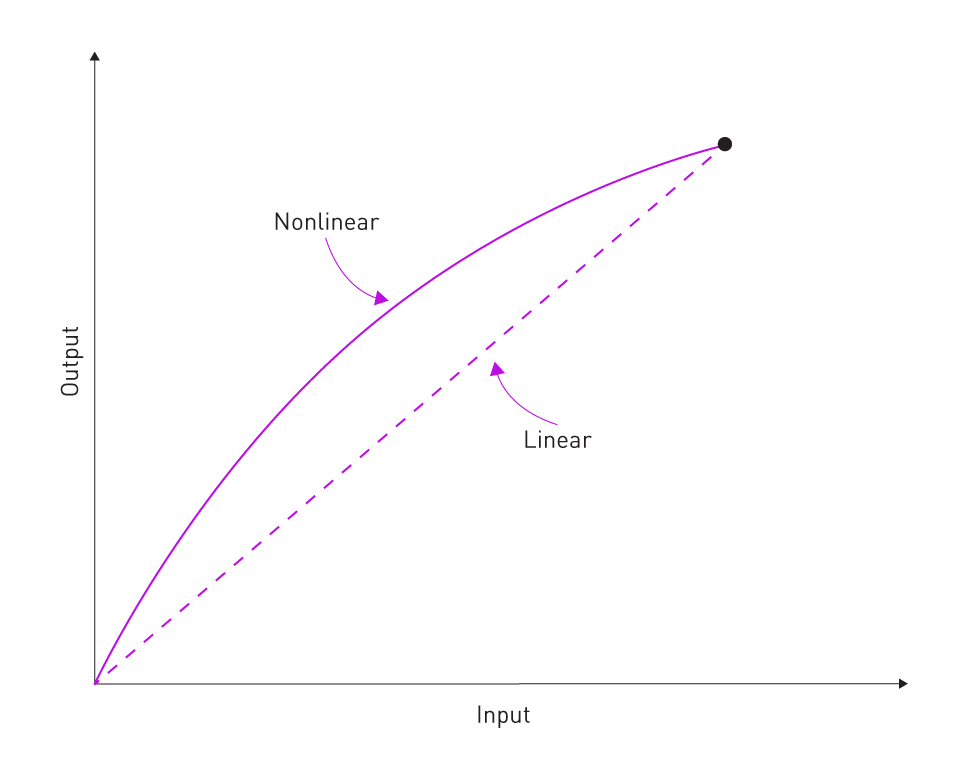

If the output of a sensor varies proportionately with a change in the measured quantity within its designated range, the sensor is said to be linear. When the sensor's output is plotted against the measured quantity, the resulting graph is a straight line. The slope of this line corresponds to the sensor's sensitivity: the steeper the line, the higher the sensitivity.

Nonlinearity shows how a sensor's actual response deviates from its predicted linear response. It represents the maximum deviation between the actual and linear responses of the sensor over its operating range and is commonly stated as a percentage of full scale (%FS).

Figure 3: Linear vs. Nonlinear Response
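The %FS figure described above can be computed from a set of calibration points. The sketch below assumes the reference line is the straight line through the two end points of the range; other conventions, such as a best-fit line, are also in use and give different numbers. The function name and data are hypothetical.

```python
def nonlinearity_percent_fs(inputs, outputs):
    """Max deviation from the straight line through the end points, as % of full scale."""
    x0, x1 = inputs[0], inputs[-1]
    y0, y1 = outputs[0], outputs[-1]
    slope = (y1 - y0) / (x1 - x0)
    full_scale = abs(y1 - y0)
    worst = max(abs(y - (y0 + slope * (x - x0))) for x, y in zip(inputs, outputs))
    return 100.0 * worst / full_scale


# Hypothetical calibration data: input in units, output in volts
inputs = [0.0, 25.0, 50.0, 75.0, 100.0]
outputs = [0.00, 1.30, 2.60, 3.80, 5.00]

nl = nonlinearity_percent_fs(inputs, outputs)
print(f"nonlinearity = {nl:.1f} % FS")
```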

Perfect linearity is uncommon in real-world settings. Sensors are usually non-linear to some extent, although in many cases, this non-linearity is so small as to be insignificant.

Importance of System Calibration and Signal Interpretation

Calibration Simplicity: Since the relationship between input and output can be defined by only two points, usually at the extremes of the range, linear sensors are frequently simpler to calibrate. Conversely, non-linear sensors could require more complex and time-consuming multi-point or curve-fitting calibration methods.

Predictable Response: Predictability is required for systems that depend on sensors. In addition to guaranteeing that outputs are consistent and predictable for given inputs, a linear response streamlines control strategies, data interpretation, and system design.

Accuracy: Errors may be introduced by a sensor's departure from linearity if it has non-linear features and the system's algorithms or control logic hasn't taken this into consideration.

Signal Processing: By eliminating the need for intricate algorithms or compensatory measures to account for non-linearity, linear sensors streamline the signal processing chain. This can result in more effective processing and faster response times in addition to simplifying the design.

System Feedback: Linearity in feedback control systems ensures that the control action remains consistent throughout the sensor's range. Improperly managed non-linearities can result in oscillations or instability.
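The two-point calibration mentioned above for linear sensors can be sketched as follows. The raw readings and reference values are hypothetical; the idea is only that two known points fix both the gain and the offset of a straight-line correction.

```python
def two_point_calibration(raw_low, raw_high, ref_low, ref_high):
    """Return a function that maps raw readings to calibrated values."""
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return lambda raw: gain * raw + offset


# Hypothetical raw readings (volts) taken at known reference inputs of 0 and 100 units
calibrate = two_point_calibration(raw_low=0.4, raw_high=4.4, ref_low=0.0, ref_high=100.0)
mid = calibrate(2.4)
print(f"raw 2.4 V -> {mid:.1f} units")
```

For a sensor with noticeable non-linearity, this straight-line correction leaves residual error between the two calibration points, which is why multi-point or curve-fitting methods are used instead.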

Even though many contemporary sensors and systems are made to effectively manage non-linear properties, many applications still require linearity. It facilitates calibration, simplifies system design, and improves output predictability and reliability. Achieving the best possible system performance requires an understanding of the extent and implications of non-linearity.

Stability and Drift

It is crucial to guarantee steady and reliable results in the ever-changing field of sensor technology. Two essential ideas related to long-term sensor performance measurements are stability and drift.

Cause of Sensor Drift

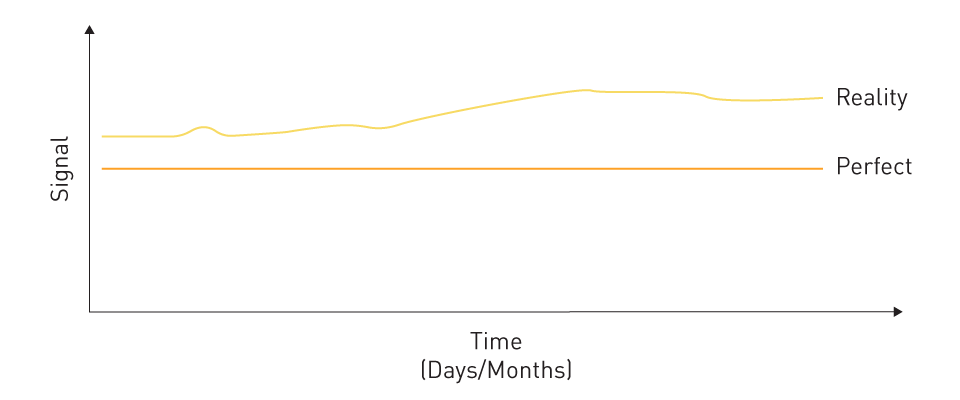

Drift describes an unwanted shift in sensor output when the input doesn't change. Instead of occurring suddenly, this variation is usually noticed over long periods of time and might be attributed to age, environmental variables, or intrinsic properties of the sensor materials.

Figure 4: Signal Drift

Sensor drift can be caused by various factors, such as:

Aging of Components: The materials used in sensors can be impacted by time or wear and tear, which might result in changed properties.

Thermal Effects: Temperature variations can affect the resistance, capacitance, and other characteristics of sensor parts. When exposed to abrupt or drastic temperature fluctuations, even sensors that have temperature correction may drift.

Mechanical Stress: Particularly in sensors that depend on material deformation or movement, physical strain or repeated use might result in mechanical changes that induce drift.

Chemical Contamination: Sensor components may be impacted in situations containing volatile chemicals or moisture, which could eventually cause drift.

Electronic Noise: Drift over long periods of time can also be the result of low-level interference or noise building up in electrical components.

Methods to Minimize and Compensate for Drift

The first step in reducing and offsetting drift is identifying its underlying cause. The following are some tactics:

Calibration: Frequent recalibration can correct for drift. Periodically setting new reference points helps maintain the accuracy of the sensor's outputs.

Temperature Compensation: Thermal effects can be lessened by monitoring temperature with additional sensors and modifying the sensor output accordingly.

Material Choice: Drift can be naturally decreased by choosing materials that are resilient to mechanical loads, age, and chemical impacts. This could entail choosing materials that are better quality or made with stability in mind.

Differential Measurement: Drift can be minimized or eliminated by taking a reference measurement in addition to the intended measurement. Because both measurements drift in the same direction, the difference between them remains consistent.

Feedback Systems: For real-time drift compensation, feedback methods that continuously modify and correct the sensor output can be useful.

Environmental Control: Drift can be greatly reduced if the temperature, humidity, and other environmental conditions are kept constant.

Advanced Signal Processing: The system processing chain can be enhanced by integrating algorithms that use machine learning techniques or trends to identify and rectify drift.

Shielding and Protection: The durability of sensors can be extended by shielding them from outside influences such as dust, moisture, or chemical pollutants by coatings, enclosures, or seals.
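The differential measurement strategy in the list above can be illustrated with a toy model. It assumes two perfectly matched channels that drift at exactly the same rate, which real hardware only approximates; the function names, signal level, and drift rate are all hypothetical.

```python
def differential_reading(active, reference):
    """Subtract a reference channel so drift common to both channels cancels."""
    return active - reference


def simulate(hours, signal=2.0, drift_per_hour=0.01):
    """Hypothetical pair of matched channels that drift at the same rate."""
    drift = drift_per_hour * hours
    active = signal + drift  # sees the measurand plus drift
    reference = drift        # sees drift only
    return active, reference


active_0, ref_0 = simulate(0)
active_100, ref_100 = simulate(100)

print(f"raw reading after 100 h:  {active_100:.2f}")
print(f"differential after 100 h: {differential_reading(active_100, ref_100):.2f}")
```

In this idealized case the raw reading moves away from the true signal over time while the differential reading stays put. Any mismatch between the two channels would leave some residual drift.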

In conclusion, although drift is an inevitable part of most sensors, it is important to comprehend its causes and put strategies in place to lessen its impacts. It is possible to guarantee that sensors produce accurate and dependable data for extended periods of time with regular maintenance, proper system design, and sophisticated compensatory mechanisms.

Common Mode Rejection (CMR)

Common mode rejection (CMR) is an important parameter in the context of sensor and signal conditioning circuits, particularly in applications where noise and interference are prevalent.

Definition and Importance

Common Mode Rejection (CMR) refers to the ability of a sensor circuit, particularly a differential sensor or amplifier, to reject signals that are common to both input terminals. These common-mode signals often originate from external interference, such as electromagnetic noise or ground potential differences, and can distort the sensor's output if not properly attenuated. CMR is important because it ensures that the measured signal accurately reflects the variation in the physical quantity being measured, while rejecting unwanted noise and interference.
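Common mode rejection is usually quantified as the common-mode rejection ratio (CMRR), the ratio of differential gain to common-mode gain expressed in decibels. The sketch below uses that standard definition; the gain values and input voltages are assumptions chosen only to show the scale of the effect.

```python
import math


def cmrr_db(differential_gain, common_mode_gain):
    """Common-mode rejection ratio in decibels."""
    return 20.0 * math.log10(abs(differential_gain / common_mode_gain))


def output_voltage(v_plus, v_minus, differential_gain, common_mode_gain):
    """Output of a differential stage with finite common-mode gain."""
    v_diff = v_plus - v_minus
    v_cm = (v_plus + v_minus) / 2.0
    return differential_gain * v_diff + common_mode_gain * v_cm


ratio = cmrr_db(100.0, 0.01)

# Assumed inputs: a 10 mV differential signal riding on 1 V of common-mode interference
out = output_voltage(1.005, 0.995, 100.0, 0.01)
print(f"CMRR = {ratio:.0f} dB, output = {out:.3f} V")
```

With these assumed numbers, the ideal output would be 1.000 V, and the remaining 0.010 V is the part of the common-mode interference that leaks through.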

Improving CMR

Several techniques can be employed to enhance CMR in sensor circuits:

Differential Signal Processing: Utilize a differential amplifier or sensor configuration to amplify the voltage difference between the sensor's output terminals while rejecting common-mode signals.

Balanced Sensor Design: Design the sensor with balanced input and output impedances to minimize the effects of common-mode signals. Balanced circuits help ensure that any common-mode signals present are equally attenuated at both input terminals, resulting in improved CMR.

Shielding and Grounding: Implement shielding techniques to protect the sensor from external electromagnetic interference. Proper grounding practices help minimize ground loops and reduce the impact of ground potential differences on the sensor's output.

Signal Filtering: Incorporate filtering circuitry to attenuate common-mode noise within the sensor circuit. Low-pass filters, for example, can selectively pass the desired signal while attenuating high-frequency noise and interference.

Isolation: Use isolation techniques, such as optocouplers or transformers, to electrically isolate the sensor circuit from external interference sources. Isolation helps prevent common-mode signals from coupling into the sensor circuit, thus improving CMR.

dv/dt Immunity

In sensor circuits and signal processing systems, rapid changes in voltage can lead to noise and disturbances that can affect the accuracy and reliability of measurements.

Definition and Importance

dv/dt refers to the rate of change of voltage with respect to time. In sensor circuits, dv/dt immunity represents the ability of a sensor to maintain stable operation and accurate measurements in the presence of rapid changes in voltage across the circuit or in the environment. Maintaining high dv/dt immunity is important for ensuring the reliability and accuracy of sensor measurements, particularly in applications where sensors are exposed to dynamic and noisy environments.
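One reason fast voltage edges disturb sensor circuits is that any stray capacitance between the switching node and the sensor converts dv/dt into an injected current, following i = C · dv/dt. The sketch below illustrates the magnitudes involved; the 400 V edge, 100 ns rise time, and 2 pF of stray capacitance are assumed values, not figures from any particular design.

```python
def slew_rate(v_start, v_end, seconds):
    """Average dv/dt of a voltage transition, in volts per second."""
    return (v_end - v_start) / seconds


def coupled_current(capacitance_f, dv_dt):
    """Current injected through a capacitance by a changing voltage: i = C * dv/dt."""
    return capacitance_f * dv_dt


# Assumed values: a 400 V edge in 100 ns across 2 pF of stray capacitance
dv_dt = slew_rate(0.0, 400.0, 100e-9)    # 4e9 V/s, i.e. 4 kV/us
i_noise = coupled_current(2e-12, dv_dt)  # 8e-3 A

print(f"dv/dt = {dv_dt / 1e9:.1f} kV/us, injected current = {i_noise * 1e3:.1f} mA")
```

Even a few picofarads of coupling can inject milliamps during a fast edge, which is why shielding, filtering, and isolation are used to limit this path.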

Improving dv/dt Immunity

Several strategies can be employed to enhance dv/dt immunity in sensor circuits:

Noise Filtering and Shielding: Implementing noise filtering techniques such as low-pass filters can help attenuate high-frequency voltage transients that adversely affect sensor performance. Additionally, shielding sensitive components or signal lines from external electromagnetic interference (EMI) sources can reduce the susceptibility to dv/dt-induced noise.

Transient Voltage Suppressors: Transient voltage suppressor diodes or surge protection devices provide a low-impedance path to ground for transient events, diverting excess energy away from sensitive circuit components.

Component Selection: Choose sensor components with robust electrical characteristics and transient immunity ratings. Components rated for high surge currents and transient voltages are better suited to withstand dv/dt disturbances and maintain stable operation in challenging environments.

Isolation: Implement isolation techniques, such as optical isolation or galvanic isolation, to electrically separate the sensor from external disturbances. Isolation helps prevent transient events from propagating into the sensor circuit, preserving its integrity and performance.
